Task 1 – Frontend, DAQ and On-line

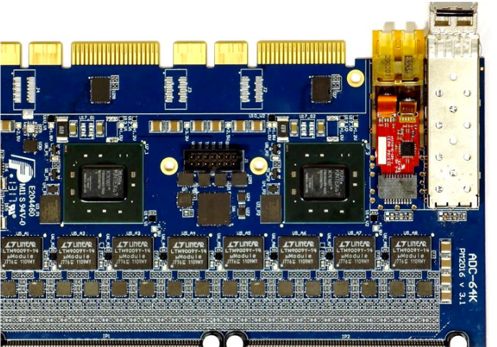

Data source: Information processing starts already at the detector level. For a successful operation one needs a good interplay between the detectors, the connected frontend electronics and the readout controllers to obtain detector specific time sliced data. The feature extraction on the FPGAs (field programmable gate arrays) requires HDL (VHDL, Verilog) programming and specific knowledge on the detectors. Software algorithms and hardware can partly be common for different detectors and experiments (FEE ASICs, ADC/TDC boards).

Data switching network: The time sliced data from the detector parts will be put together at the data acquisition (DAQ) network. The first stage consists of concentrator ASICs (e.g. CERN GBTx) in areas where radiation tolerance or high density is required or FPGA based concentrator boards in less demanding areas. A time distribution system with sub ns precision ensures the overall system synchronization, systems like SODA or White Rabbit, which is also used in the FAIR accelerator control system, are used in different layers of the readout chain. There are many common issues between CBM and PANDA, which calls for joint developments and unified designs, while keeping in mind differences, e.g. small events and FPGA based compute nodes as first event selection layer for PANDA vs. large events and an InfiniBand based Computer cluster for CBM.

Data reduction: Before writing the data to disk one needs to remove background and uninteresting events. Reduction levels of a factor 1000 need to be achieved. For this first level event selection (FLES) one needs to bring together experts of parallel programming, from the detectors and from the physics side. At this level new processing hardware on multicore CPUs and vector processing units (e.g. Xeon ISX-H, Nvidia Tesla GPU, ARMv8 64bit architecture) requires concurrent programming and new algorithms based on structures of arrays instead of arrays of structures to achieve the performance challenge. New data flow based models are to be employed with common tools for online and offline (project ALFA). Also the online calibration has to be prepared at this level.

Task 2 – Demonstrator

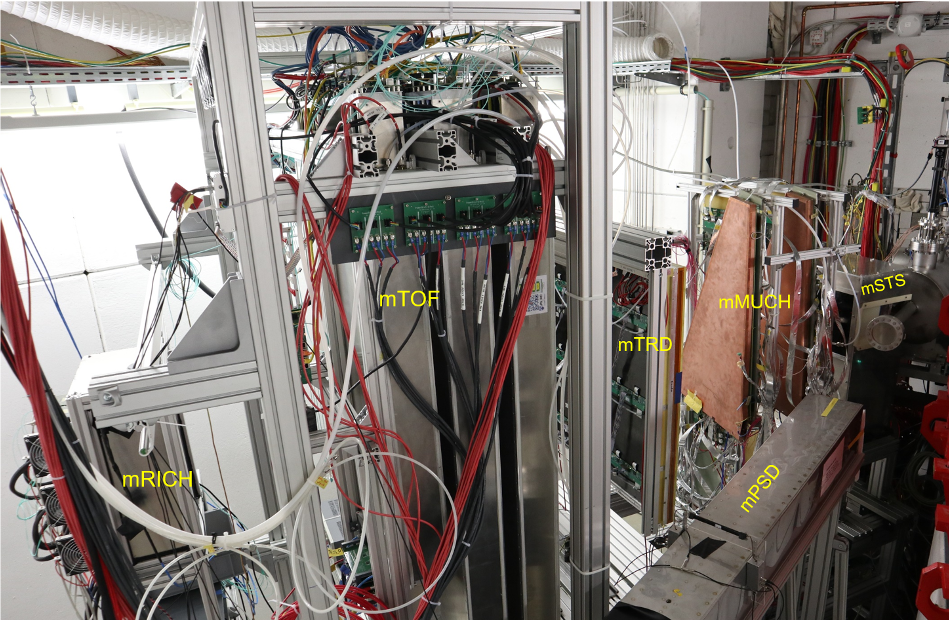

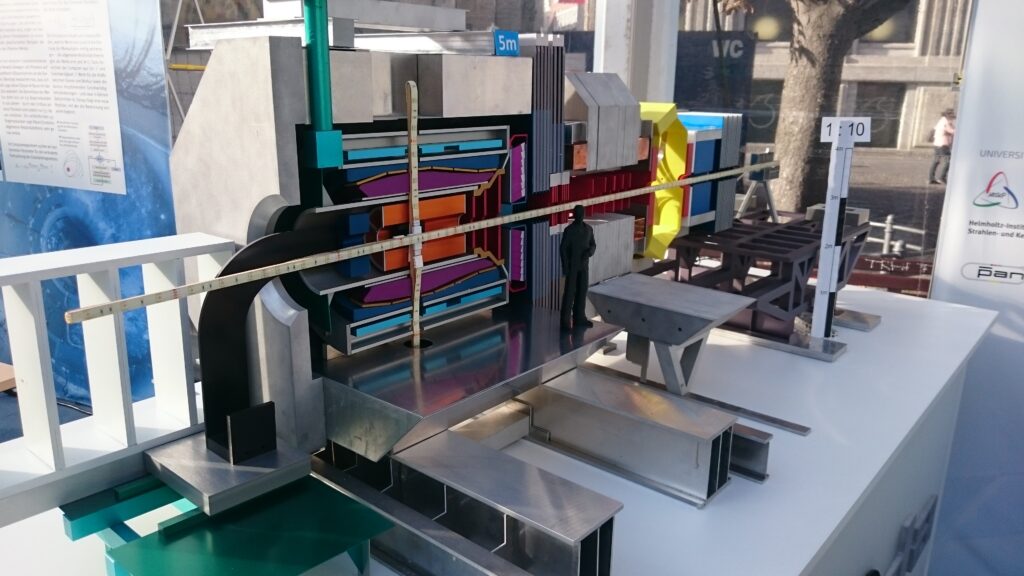

The hardware and software being developed in the frameworks of task 1 need to be tested in real life. The goal of this task is to set up demonstrator hardware to test the hard/software. For the PANDA experiment detectors with equipped frontend electronics will be put together at the FZJ (micro vertex detector MVD, straw tubes STT and forward endcap calorimeter EMC). All are connected by TRBs to the DAQ and will have a full SODAnet time distribution system. For the CBM experiment, a demonstrator system for the first-level event selector (FLES) will be set up in the context of the mCBM experiment at FAIR (Phase–0) that will be taking data starting in 2018. CBM frontend data will be aggregated and converted to micro time slices by a FPGA concentrator layer that feeds the data into entry nodes of a HPC cluster. The HPC nodes are interconnected by an Infiniband network and will be equipped with interface and accelerator cards, which allows to bring together the different developments in high-speed data acquisition, efficient timeslice building, and highly parallel online analysis in a common system.

Task 3 – Data analysis challenge

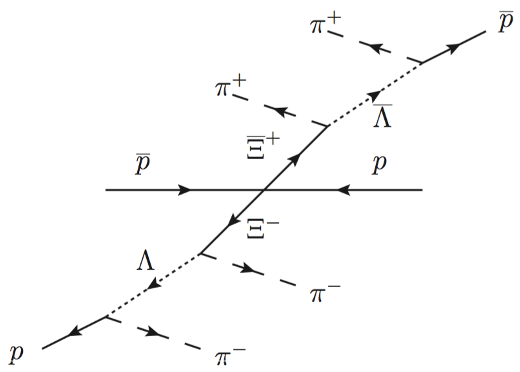

The remaining vast amount of data requires high-level data analysis tools and sophisticated partial wave analysis tools (PWA) where our present knowledge concerning existing resonances and their decay mechanisms needs to enter. In addition this task will provide the physics input for the online event selection. Even after online data reduction by up to three orders of magnitude, a vast amount of data – of the order of several PByte per month of run time at highest interaction rates – will be recorded by both CBM and PANDA. An efficient analysis of such a data volume poses a challenge to both the computing infrastructure and the high-level analysis tools, e.g., for partial wave analysis in case of PANDA, or for multi-particle correlations in case of CBM. Such tools will be developed on the basis of the FairRoot software framework. An offline computing model, including aspects of distributed computing, which accommodates the needs of CBM and PANDA has to be developed on the base of existing solutions and experiences from the LHC experiments.

Task 4 – Education and outreach

Dedicated schools for PhDs and young researchers on topics of the research network are planned. An important aspect to improve communication, education and information exchange is to send young researchers for a limited time to foreign research institutes. An emphasis will be put on the exchange of people between EU and non-EU countries.

Regularly performed outreach activities, especially for school children to attract them for physics and cutting edge technologies. This includes lectures to the public, lectures and practical training for school classes, hands-on training and working together with school children during vacation periods. Material for the outreach activities will be collected and made available for general use.